Diagnose Latent Cytomegalovirus Using CyTOF Data and Deep Learning

Introduction¶

A deep neural network (a.k.a. deep learning) is an artificial neural network with multiple layers between the input and output layers. It was proven to be highly effective for a variety of predictive tasks. In health care, deep learning is quickly gaining popularity and has been implemented for applications such as image-based diagnosis and personalized drug recommendations. In this tutorial, we will build a tailored deep-learning model for CyTOF data to diagnosis latent Cytomegalovirus infection using Keras and TensorFlow. To run this tutorial, download the github repository and run the jupyter notebook.

Step 1: Import Functions¶

Before we start, we first import functions that we will use in this tutorial from different libraries.

##### Step 1: import functions #####

from keras.layers import Dense, Flatten, BatchNormalization, Activation, Conv2D, AveragePooling2D, Input

from keras.models import load_model, Model

from keras.optimizers import Adam

from keras.callbacks import ModelCheckpoint, EarlyStopping

from keras import backend as K

import pickle

import pandas as pd

import numpy as np

from numpy.random import seed; seed(111)

import random

import matplotlib.pyplot as plt

import seaborn as sns

from tensorflow import set_random_seed; set_random_seed(111)

from sklearn.metrics import roc_curve, auc

from sklearn.externals.six import StringIO

from sklearn.tree import export_graphviz, DecisionTreeRegressor

from scipy.stats import ttest_ind

from IPython.display import Image

import pydotplus

Step 2: Load data¶

We load the data, which are stored in the "allData.obj" file. The data includes three parts, meta-data, CyTOF data, and marker names.

- The CyTOF data contains the single-cell profile of 27 markers. For the convenience of this tutorial, we already downloaded the fcs files from ImmPort and preprocessed the data into Numpy arrays. See an example for the preprocessing of the FCS files. The dimension of the Numpy array is 472 samples x 10000 cells x 27 markers.

- The metadata contains the sample level information, including the study accession number for each sample and the ground truth of CMV infection. It is stored as a pandas data frame.

- The marker names contain the name of the 27 markers.

##### Step 2: load data #####

#Download data

tutorial_files = ! ls Data

if "allData.obj" not in tutorial_files:

print("Downloading Data:")

! wget https://storage.googleapis.com/deep_learning_cytof/allData.obj -P ./Data

#load data

allData = pickle.load( open( "Data/allData.obj", "rb" ) )

metaData = allData["metaData"]

cytoData = allData["cytoData"]

markerNames = allData["markerNames"]

# inspect the data

print("\nFirst 5 rows of metaData: ")

print(metaData.head(),"\n")

print("Dimensions of cytoData: ",cytoData.shape,"\n")

print("Names of the 27 makers: \n",markerNames.values)

Step 3: Split data into training, validation and testing sets¶

Now, lets split the data into training, validation, and testing sets. The training data is used to train the deep learning model. The validation dataset is used to select the best parameters for the model and to avoid overfitting. The test dataset is used to evaluate the performance of the final model.

The CyTOF dataset contains samples from 9 studies available on ImmPort. We will use samples from the study SDY515 as a validation set, samples from the study SDY519 as a testing set, and the rest of the samples as a training set.

##### Step 3: split train, validation and test######

y = metaData.CMV_Ab.values

x = cytoData

train_id = (metaData.study_accession.isin(["SDY515","SDY519"])==False)

valid_id = metaData.study_accession=="SDY515"

test_id = metaData.study_accession =="SDY519"

x_train = x[train_id]; y_train = y[train_id]

x_valid = x[valid_id]; y_valid = y[valid_id]

x_test = x[test_id]; y_test = y[test_id]

Step 4: Define the deep learning model¶

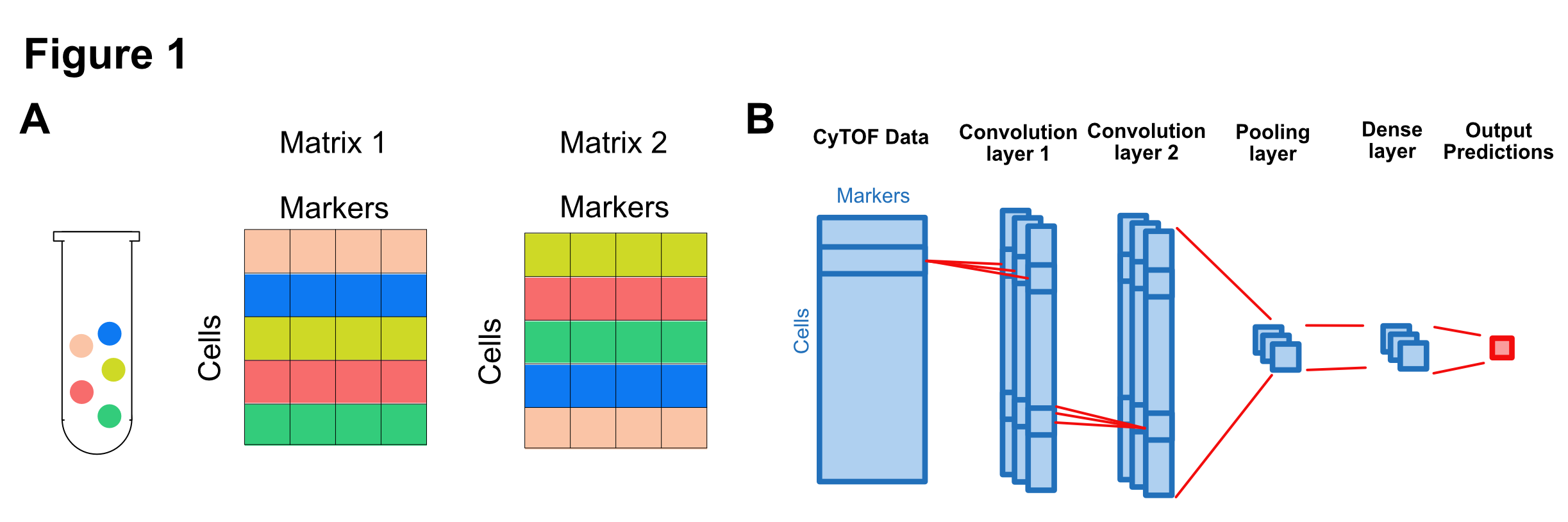

We will use a customized convolution neural network (CNN) to analyze the CyTOF data. For each sample, the CyTOF data is a matrix with rows as cells and columns as markers. It is crucial to notice that the CyTOF data is an unordered collection of cells (rows). For example, both matrix 1 and matrix 2 profiles the same sample in Figure 1A, even though they have different orders of rows.

Based on the characteristics of the CyTOF data, we design a CNN model that is invariant to the permutation of rows. The model contains six layers: input layer, first and second convolution layer, pooling layer, dense layer, and output layer.

The input layer receives the CyTOF data matrix.

The first convolution layer uses three filters to scan each row of the CyTOF data. This layer extracts relevant information from the cell marker profile of each cell.

The second convolution layer uses three filters to scan each row of the first layer's output. Each filter combines information from the first layer for each cell.

The pooling layers averages the outputs of the second convolution layer. The purpose is to aggregate the cell level information into sample-level information.

The dense layer further extracts information from the pooling layer.

The output layer uses logistic regression to report the probability of CMV infection for each sample.

##### Step 4: define model #####

# input

model_input = Input(shape=x_train[0].shape)

# first convolution layer

model_output = Conv2D(3, kernel_size=(1, x_train.shape[2]),

activation=None)(model_input)

model_output = BatchNormalization()(model_output)

model_output = Activation("relu")(model_output)

# sceond convolution layer

model_output = Conv2D(3, (1, 1), activation=None)(model_output)

model_output = BatchNormalization()(model_output)

model_output = Activation("relu")(model_output)

# pooling layer

model_output = AveragePooling2D(pool_size=(x_train.shape[1], 1))(model_output)

model_output = Flatten()(model_output)

# Dense layer

model_output = Dense(3, activation=None)(model_output)

model_output = BatchNormalization()(model_output)

model_output = Activation("relu")(model_output)

# output layer

model_output = Dense(1, activation=None)(model_output)

model_output = BatchNormalization()(model_output)

model_output = Activation("sigmoid")(model_output)

Step 5: Fit the model¶

In this step, we will use the training data to fit the model. We will use the Adam algorithm, which is an extension of the gradient descent method to train our model. Adam algorithm will search the model space step by step (epochs) until the optimal model is identified. At each step, we will use validation data to evaluate the performance of the model. The best model will be saved.

##### Step 5: Fit model #####

# specify input and output

model = Model(inputs=[model_input],

outputs=model_output)

# define loss function and optimizer

model.compile(loss='binary_crossentropy',

optimizer=Adam(lr=0.0001),

metrics=['accuracy'])

# save the best performing model

checkpointer = ModelCheckpoint(filepath='Result/saved_weights.hdf5',

monitor='val_loss', verbose=0,

save_best_only=True)

# model training

model.fit([x_train], y_train,

batch_size=60,

epochs=500,

verbose=1,

callbacks=[checkpointer],

validation_data=([x_valid], y_valid))

Step 6: Plot the training history¶

We can view the training history of the model by plotting the performance (value of the loss function) for training and validation data in each epoch.

##### Step 6: plot train and validation loss #####

plt.plot(model.history.history['loss'])

plt.plot(model.history.history['val_loss'])

plt.title('model train vs validation loss')

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['train', 'validation'], loc='upper right')

plt.show()

Step 7: Evaluate the performance using test data¶

We load the final model from a save file (Final_weights.hdf5) for the following analysis steps. We will use the test data, which has not been touched so far, to evaluate the performance of the final model. We will draw a Receiver Operator Characteristic(ROC) Curve and use Area Under the Curve (AUC) to measure performance.

##### Step 7: test the final model #####

# load final model

final_model = load_model('Data/Final_weights.hdf5')

# generate ROC and AUC

y_scores = final_model.predict([x_test])

fpr, tpr, _ = roc_curve(y_test, y_scores)

roc_auc = auc(fpr, tpr)

# plot ROC curve

plt.plot(fpr, tpr)

plt.plot([0, 1], [0, 1], 'k--')

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

plt.title('AUC = {0:.2f}'.format(roc_auc))

plt.show()

Step 8: Interpret the deep learning model.¶

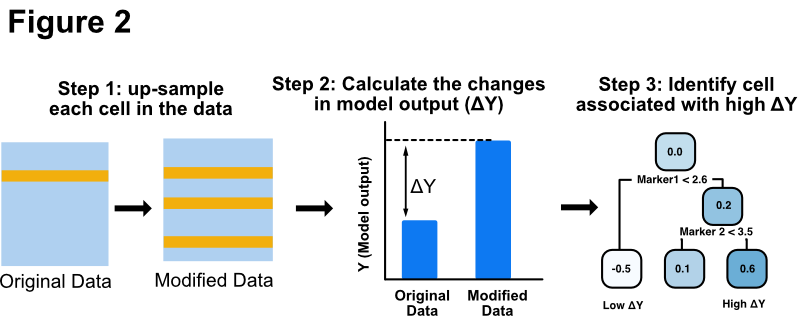

We will use a permutation-based method to interpret the deep CNN model (Fig. 2). For each cell in cytometry data, we up-sampled the cell by copying it to replace other randomly chosen cells within the sample. We then applied the deep CNN model on both the original data and the permuted data. The difference in the model output (ΔΥ) quantifies the impact of the cell on the output of the deep learning model. Finally, we build a decision tree model to idenitfy cell subsets that have the highest ΔΥ.

##### Step 8: Interpret the deep learning model. #####

# warning: may take a long time (around 30 mins) to run

# Calculate the impact of each cell on the model output

dY = np.zeros([x_test.shape[0],x_test.shape[1]])

s1 = np.random.randint(0,(x_test.shape[1]-1),int(x_test.shape[1]*0.05))

for i in range(x_test.shape[0]):

pred_i = final_model.predict([x_test[[i],:,:,:]])

for j in range(x_test.shape[1]):

t1 = x_test[[i],:,:,:].copy()

t1[:,s1,:,:] = t1[:,j,:,:]

pred_j = final_model.predict([t1])

dY[i,j] = pred_j-pred_i

# reformat dY

x_test2 = x_test.reshape((x_test.shape[0]*x_test.shape[1],27))

dY = dY.reshape([x_test.shape[0]*x_test.shape[1]])

# Build decision tree to identify cell subset with high dY

regr_1 = DecisionTreeRegressor(max_depth=4)

regr_1.fit(x_test2, dY)

# Plot the decision tree

dot_data = StringIO()

export_graphviz(regr_1, out_file=dot_data,

feature_names= markerNames,

filled=True, rounded=True,

special_characters=True)

graph = pydotplus.graph_from_dot_data(dot_data.getvalue())

Image(graph.create_png())

Conclusion¶

In this tutorial, we build a deep convolutional neural network (CNN) to analyze CyTOF data. The deep CNN model is able to diagnose latent CMV infection with high accuracy. In addition, we were able to interpret the deep learning model using a permutation-based method. We discovered that a CD3+ CD8+ CD27- CD94+ population that have the highest impact on the deep CNN model.