Multiple Methods for Downloading and Analyzing ImmPort data in Python¶

During this tutorial we will explore three methods for downloading information from ImmPort, along with ways to format the data so it is ready for analysis. Approaches to analyzing the data are covered in separate R and Python tutorials, which are available at immport.org. The original Python tutorial, available in HTML and Jupyter Notebook formats, is a very good source of information on both downloading and analyzing ImmPort data, and is the basis for much of the code in this tutorial.

This tutorial focuses only on downloading and preparing data for analysis, not on the analysis itself. The choice of analysis language is left to the researcher; this tutorial shows how the work can be done in Python. A similar tutorial using R is planned.

Overview of Access to ImmPort data¶

There are 3 alternative methods for downloading data from ImmPort. Which one to choose is up to the researcher, but each method has advantages depending on your analysis plan.

Method 1: Download using the ImmPort Data Browser¶

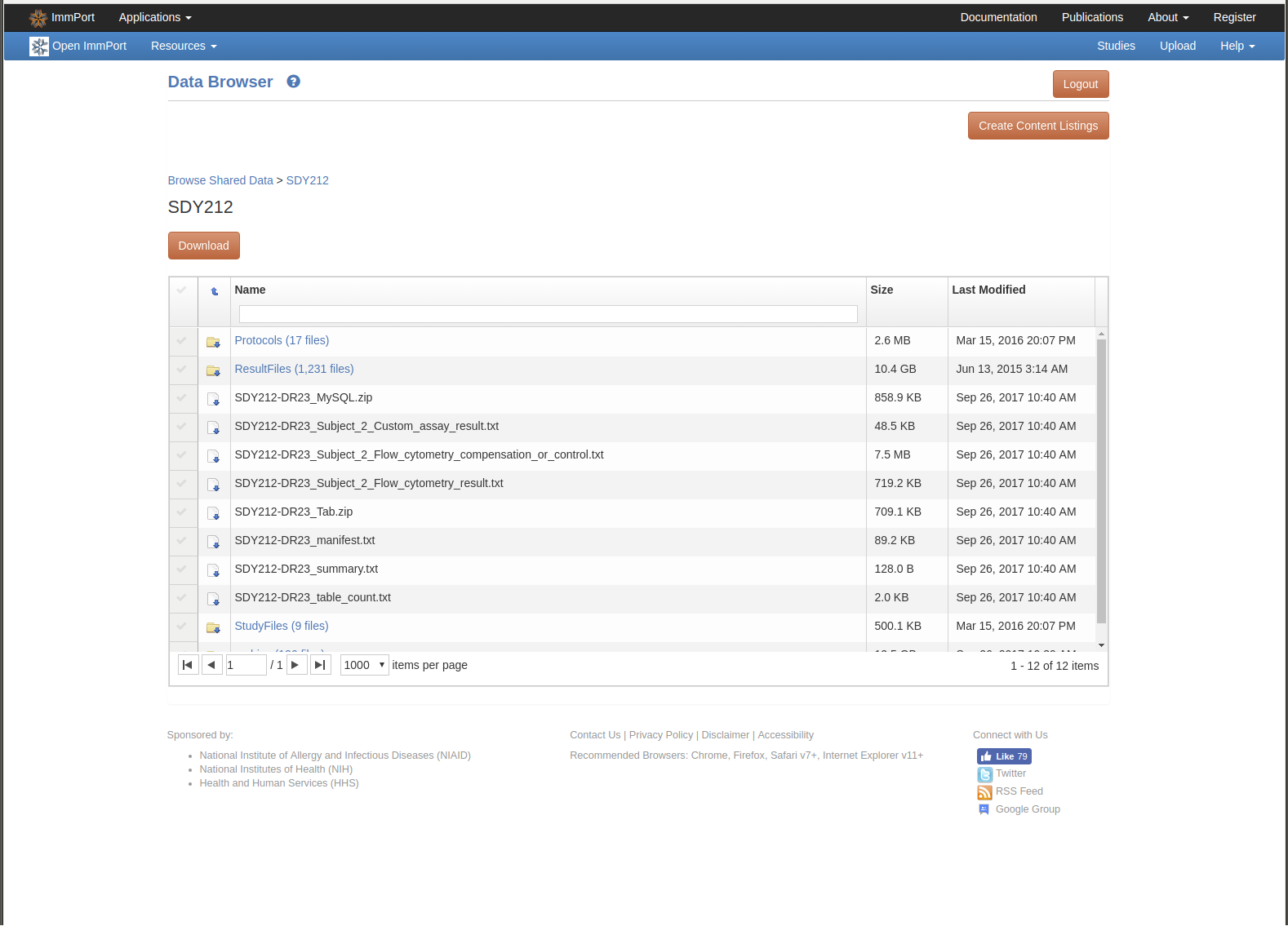

The ImmPort Data Browser is a web-based application that allows the user to download individual files or folders of files, and it might be a good choice for beginning data scientists. The screenshot below shows the starting screen for downloading data for SDY212.

For this tutorial we will be downloading the SDY212-DR23_Tab.zip file. This zip package contains all the data files for SDY212 in TSV format. Each file represents the content of a database table containing rows of information for study SDY212. An overview of the ImmPort database model is available here and detailed table information is available here. One advantage of this method is that you can get all the files you may need with one click.

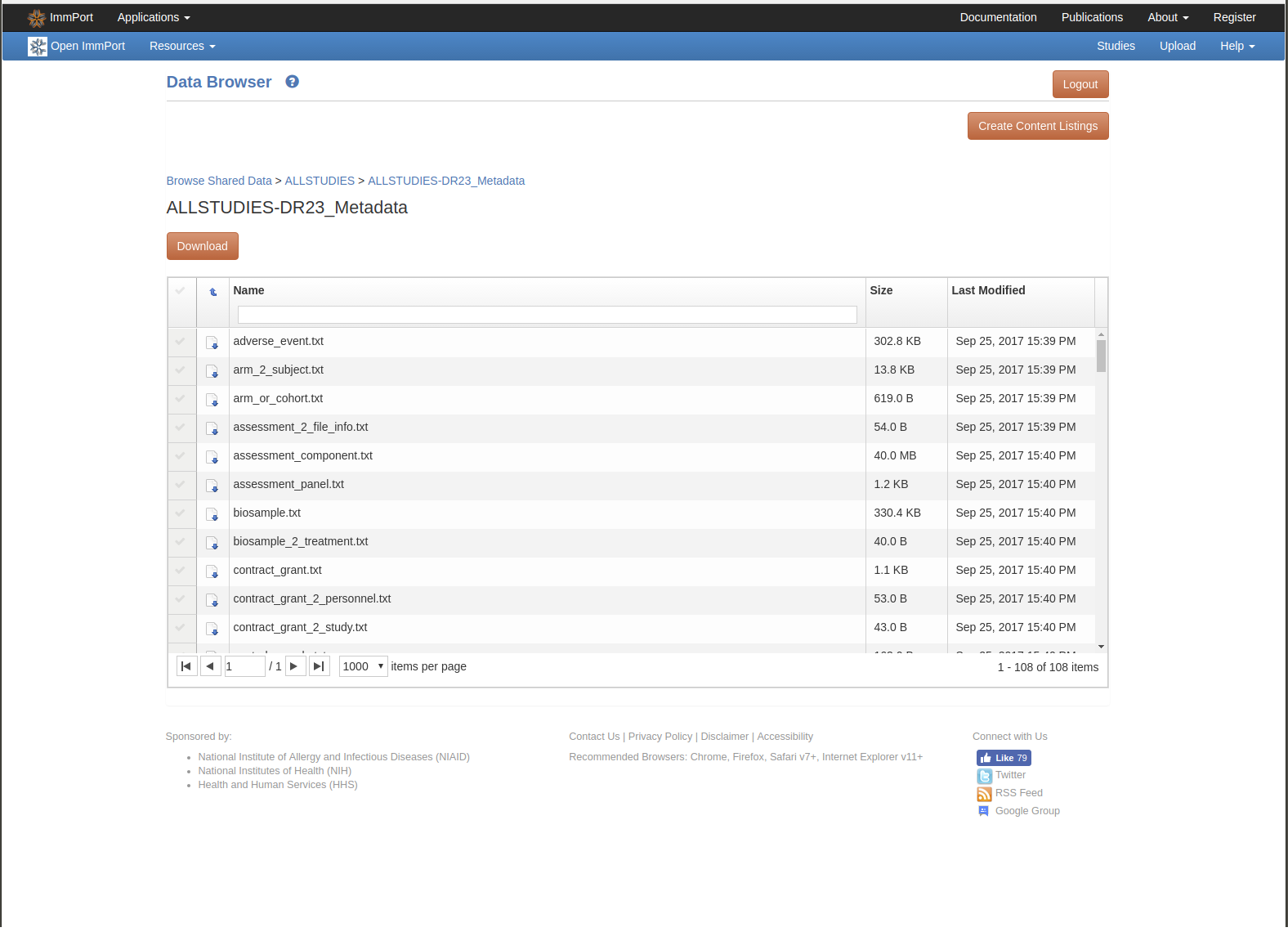

If you are interested in cross-study analysis, there is an ALLSTUDIES-DRXX_Tab.zip file containing files that hold the data for all the studies. Another alternative is to download individual files, which are in the ALLSTUDIES/ALLSTUDIES-DRXX_Metadata folder. An example screenshot is shown below.

Method 2: Download using the ImmPort File Download API¶

This method allows the user to download files of interest through a programmatic API. A bash shell script has been developed that can be used to download files, and this tutorial will illustrate how you can download files using Python. More details on how to use Python are in the section for this method. One advantage of this method is that you can download individual files programmatically rather than through a web interface.

The ImmPort files are hosted and downloaded using an Aspera file transfer system. Aspera is an application that greatly increases performance when downloading large files. The Aspera company provides executables, which are packaged in the shell script zip package and must be downloaded from ImmPort.

Method 3: Download using the ImmPort Query API¶

This method allows the user to obtain data from a REST API. Currently this REST API supports downloading assay results for experiments using ELISA, ELISPOT, HAI, etc. If you are interested in downloading raw experiment files such as FCS files, there is an API call to support this need. A small example of how to use this API method is included later. The available REST methods and the filters you can add to your query are detailed in another document. The information returned by the API methods includes many metadata elements that, with method 1 or 2, may be spread across 4 files. It may therefore be easier to start with this method until you are comfortable using Python pandas or R data frames to merge multiple files into one coherent data set.

Getting Started¶

The section below sets up the Python environment used by all the methods. This tutorial assumes you have downloaded the File Download Tool distribution from ImmPort, which contains the bash shell script, the immport_download.py file and the Aspera executables. The immport_download.py file contains convenience functions for accessing the API; please review it if you want more details. The tutorial also assumes you have unzipped the distribution into a directory at the same level as the notebook directory.

import sys

import os

import pandas as pd

# Set the Python path to the location of the directory containing "immport_download.py"

immport_download_code = "../bin/"

sys.path.insert(0,immport_download_code)

os.chdir(immport_download_code)

import immport_download

Example Configuration Properties¶

user_name = "REPLACE"

password = "REPLACE"

download_directory = "../output"

data_directory = "../data"

sdy212_directory= "../data/SDY212-DR23_Tab/Tab"
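
Hard-coding a password in a notebook makes it easy to leak when the notebook is shared. A minimal sketch of one alternative, assuming you export two environment variables before starting Jupyter. The variable names IMMPORT_USERNAME and IMMPORT_PASSWORD and the helper load_credentials are hypothetical and are not part of the ImmPort tooling:

```python
import os


def load_credentials(env=None):
    """Return (user_name, password) read from environment variables.

    IMMPORT_USERNAME and IMMPORT_PASSWORD are hypothetical names chosen
    for this sketch; ImmPort itself does not define them.
    """
    env = os.environ if env is None else env
    user = env.get("IMMPORT_USERNAME")
    pwd = env.get("IMMPORT_PASSWORD")
    if not user or not pwd:
        raise RuntimeError(
            "Set IMMPORT_USERNAME and IMMPORT_PASSWORD before running the notebook"
        )
    return user, pwd
```

With the variables set, `user_name, password = load_credentials()` could replace the two "REPLACE" assignments above.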

Method 1: Download using the ImmPort Data Browser¶

In preparation for this step, the SDY212-DR23_Tab.zip file was downloaded using the Data Browser and unzipped into the ../data/SDY212-DR23_Tab/Tab directory. The following 4 files from this directory will be used:

- subject.txt - general subject demographic information.

- arm_2_subject.txt - mapping from subjects to study arms/cohorts.

- arm_or_cohort.txt - study arm names and descriptions.

- hai_result.txt - HAI results.

More details on preparing the information from these files, and on analysis, are available in the tutorial mentioned at the top of this tutorial.

# To view the contents of the ../data/SDY212-DR23_Tab/Tab directory, uncomment the command below

# %ls $sdy212_directory
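
The unzip step described above can also be scripted. A minimal sketch using only the standard library; extract_package is a hypothetical helper, and nothing is assumed about the internal folder layout of the ImmPort zip file beyond it containing .txt tables:

```python
import zipfile
from pathlib import Path


def extract_package(zip_path, dest_dir):
    """Unzip a downloaded package and return the extracted .txt files, sorted."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(dest)
    # Search recursively because the tables may sit in a sub-folder
    return sorted(dest.rglob("*.txt"))
```

Printing the returned paths gives the same information as the %ls command above, and works outside a notebook as well.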

Read in the 4 files and load into pandas DataFrames¶

subject_file = sdy212_directory + "/subject.txt"

arm_2_subject_file = sdy212_directory + "/arm_2_subject.txt"

arm_or_cohort_file = sdy212_directory + "/arm_or_cohort.txt"

hai_result_file = sdy212_directory + "/hai_result.txt"

subjects = pd.read_table(subject_file, sep="\t")

arm_2_subject = pd.read_table(arm_2_subject_file, sep="\t")

arm_or_cohort = pd.read_table(arm_or_cohort_file, sep="\t")

hai_results = pd.read_table(hai_result_file,sep="\t")
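
The four read calls above follow one pattern, and the same pattern is repeated for method 2. A minimal sketch of a helper that captures it; load_tables and TABLES are hypothetical names introduced for illustration, and pd.read_csv with sep="\t" is used as an equivalent of pd.read_table:

```python
from pathlib import Path

import pandas as pd

# Table names used in this tutorial (each stored as <name>.txt)
TABLES = ("subject", "arm_2_subject", "arm_or_cohort", "hai_result")


def load_tables(directory, names=TABLES):
    """Read <directory>/<name>.txt for each name into a dict of DataFrames."""
    return {
        name: pd.read_csv(Path(directory) / f"{name}.txt", sep="\t")
        for name in names
    }
```

For example, `tables = load_tables(sdy212_directory)` would make the subject table available as `tables["subject"]`.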

Review content of the 4 Data Frames¶

subjects.head(5)

arm_2_subject.head(5)

arm_or_cohort.head()

hai_results.head()

# To review the columns for all data frames

print(subjects.columns)

print(arm_2_subject.columns)

print(arm_or_cohort.columns)

print(hai_results.columns)

Clean up and merge data for analysis¶

The steps below remove columns not needed for analysis and assign more meaningful labels to the ARM names. From the arm_or_cohort contents reviewed above, we can see that ARM894/Cohort_1 corresponds to the Young participants and ARM895/Cohort_2 corresponds to the Old participants. We then merge the ARM information with the subject demographic information.

subjects = subjects[['SUBJECT_ACCESSION','GENDER','RACE']]

# .copy() avoids the pandas SettingWithCopyWarning when ARM_NAME is added below
arm_2_subject = arm_2_subject[['SUBJECT_ACCESSION','ARM_ACCESSION','MIN_SUBJECT_AGE']].copy()

arm_2_subject['ARM_NAME'] = ""

arm_2_subject.loc[arm_2_subject['ARM_ACCESSION'] == 'ARM894','ARM_NAME'] = "Young"

arm_2_subject.loc[arm_2_subject['ARM_ACCESSION'] == 'ARM895','ARM_NAME'] = "Old"

arm_2_subject_merged = pd.merge(subjects,arm_2_subject, left_on='SUBJECT_ACCESSION', right_on='SUBJECT_ACCESSION')

arm_2_subject_merged.head()
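
The labelling and merge steps above can be tried without the real files. A minimal sketch on invented toy rows; the accession values for subjects and all demographic values are made up, and only the column names and the two ARM accessions come from this tutorial. It uses a dictionary with Series.map as an alternative to the two .loc assignments, and asks pd.merge to check that each subject appears once on each side:

```python
import pandas as pd

# Toy rows standing in for subject.txt and arm_2_subject.txt (values invented)
subjects = pd.DataFrame({
    "SUBJECT_ACCESSION": ["SUB1", "SUB2", "SUB3"],
    "GENDER": ["Female", "Male", "Female"],
    "RACE": ["Asian", "White", "White"],
})
arm_2_subject = pd.DataFrame({
    "SUBJECT_ACCESSION": ["SUB1", "SUB2", "SUB3"],
    "ARM_ACCESSION": ["ARM894", "ARM895", "ARM895"],
    "MIN_SUBJECT_AGE": [24.0, 71.0, 83.0],
})

# Map each ARM accession to a readable label in one step
arm_labels = {"ARM894": "Young", "ARM895": "Old"}
labelled = arm_2_subject.assign(
    ARM_NAME=arm_2_subject["ARM_ACCESSION"].map(arm_labels)
)

# validate raises MergeError if a subject is duplicated on either side
merged = pd.merge(subjects, labelled, on="SUBJECT_ACCESSION", validate="one_to_one")
```

Any ARM accession missing from the dictionary ends up as NaN in ARM_NAME, which makes unexpected arms easy to spot.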

Simple descriptive statistics¶

We will stop here with method one, but the next step would be to merge in the hai_result information into the arm_2_subject_merged data frame, so we could analyze the HAI result information. Details on how to accomplish this plus examples of analysis and plotting of results can be found in the more detailed tutorial mentioned at the beginning of this tutorial.

arm_2_subject_merged.groupby('ARM_NAME').count()['SUBJECT_ACCESSION']

arm_2_subject_merged.groupby('GENDER').count()['SUBJECT_ACCESSION']
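
The next step described above, merging the HAI results into the subject data frame, might look like the following. This is a sketch on invented toy rows, not the approach of the detailed tutorial. It assumes hai_result.txt carries a SUBJECT_ACCESSION column, and TITER is a placeholder name for whichever result column you analyze:

```python
import pandas as pd

# Toy stand-in for arm_2_subject_merged (values invented)
merged = pd.DataFrame({
    "SUBJECT_ACCESSION": ["SUB1", "SUB2", "SUB3"],
    "ARM_NAME": ["Young", "Old", "Old"],
})
# Toy stand-in for hai_result.txt; TITER is a placeholder column name
hai = pd.DataFrame({
    "SUBJECT_ACCESSION": ["SUB1", "SUB1", "SUB2"],
    "TITER": [40, 160, 20],
})

# Inner join keeps only subjects that have at least one HAI result
hai_merged = pd.merge(merged, hai, on="SUBJECT_ACCESSION", how="inner")
mean_titer = hai_merged.groupby("ARM_NAME")["TITER"].mean()
```

Because a subject can have several HAI rows, the merged frame has one row per result rather than one row per subject.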

Method 2: Download using the ImmPort File Download API¶

For this method, we will be using the files that are available from the ALLSTUDIES/ALLSTUDIES-DR23_Metadata directory. We will download the same 4 files that we used for method 1, but will use the Download API to download them programmatically. Because the files contain the data for all studies, we will then filter out all content not needed for SDY212 analysis.
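
The download calls that follow differ only in the file name, so they can be driven from a list. A minimal sketch; download_all is a hypothetical helper, and it assumes only that the function passed in takes the same four arguments, in the same order, as immport_download.download_file does in this tutorial:

```python
def download_all(download_fn, user_name, password, remote_dir, file_names, dest_dir):
    """Call download_fn once per file and return the remote paths requested.

    download_fn is expected to accept (user_name, password, remote_path,
    dest_dir), mirroring how immport_download.download_file is called here.
    """
    remote_paths = [f"{remote_dir.rstrip('/')}/{name}" for name in file_names]
    for remote_path in remote_paths:
        download_fn(user_name, password, remote_path, dest_dir)
    return remote_paths
```

A call might look like `download_all(immport_download.download_file, user_name, password, "/ALLSTUDIES/ALLSTUDIES-DR23_Metadata", ["subject.txt", "arm_2_subject.txt", "arm_or_cohort.txt", "hai_result.txt"], download_directory)`. Passing the download function as an argument also lets you test the loop with a stand-in function before using real credentials.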

immport_download.download_file(user_name,password,

"/ALLSTUDIES/ALLSTUDIES-DR23_Metadata/subject.txt",download_directory)

immport_download.download_file(user_name,password,

"/ALLSTUDIES/ALLSTUDIES-DR23_Metadata/arm_2_subject.txt",download_directory)

immport_download.download_file(user_name,password,

"/ALLSTUDIES/ALLSTUDIES-DR23_Metadata/arm_or_cohort.txt",download_directory)

immport_download.download_file(user_name,password,

"/ALLSTUDIES/ALLSTUDIES-DR23_Metadata/hai_result.txt",download_directory)

Check that download was successful¶

%ls ../output
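
Listing the directory shows what arrived, but a scripted check is easier to reuse. A minimal sketch that reports files which are absent or empty; missing_or_empty is a hypothetical helper, and treating a zero-byte file as a failed download is an assumption of this sketch:

```python
from pathlib import Path


def missing_or_empty(directory, file_names):
    """Return the names that are absent from directory or have zero bytes."""
    problems = []
    for name in file_names:
        path = Path(directory) / name
        if not path.is_file() or path.stat().st_size == 0:
            problems.append(name)
    return problems
```

An empty list returned for the four file names means every expected file is present and non-empty.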

Read in the 4 files and load into pandas DataFrames, filter to SDY212¶

For this step we will follow a process similar to the one used for method 1 to build our analysis data frame, with added filtering to include only information from SDY212. We will simplify the filtering because we already know that, for SDY212, the 2 ARM_ACCESSION values we are interested in are ARM894 and ARM895.

subject_file = download_directory + "/subject.txt"

arm_2_subject_file = download_directory + "/arm_2_subject.txt"

arm_or_cohort_file = download_directory + "/arm_or_cohort.txt"

hai_result_file = download_directory + "/hai_result.txt"

subjects = pd.read_table(subject_file, sep="\t")

arm_2_subject = pd.read_table(arm_2_subject_file, sep="\t")

arm_or_cohort = pd.read_table(arm_or_cohort_file, sep="\t")

hai_results = pd.read_table(hai_result_file,sep="\t")

subjects = subjects[['SUBJECT_ACCESSION','GENDER','RACE']]

arm_2_subject = arm_2_subject[['SUBJECT_ACCESSION','ARM_ACCESSION','MIN_SUBJECT_AGE']]

# Filter only records for SDY212 using ARM_ACCESSION

# .copy() so the ARM_NAME assignments below act on an independent frame
arm_2_subject = arm_2_subject[arm_2_subject['ARM_ACCESSION'].isin(['ARM894','ARM895'])].copy()

arm_2_subject.loc[arm_2_subject['ARM_ACCESSION'] == 'ARM894','ARM_NAME'] = "Young"

arm_2_subject.loc[arm_2_subject['ARM_ACCESSION'] == 'ARM895','ARM_NAME'] = "Old"

arm_2_subject_merged = pd.merge(subjects,arm_2_subject, left_on='SUBJECT_ACCESSION', right_on='SUBJECT_ACCESSION')

arm_2_subject_merged.head()
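
The filtering above relies on already knowing the two ARM accessions. When they are not known in advance, they can be looked up from the arm table first. A minimal sketch on invented toy rows; it assumes arm_or_cohort.txt has a STUDY_ACCESSION column, which this tutorial does not show, and ARM100 and SDY999 are made-up values:

```python
import pandas as pd

# Toy stand-ins for the all-studies tables (values invented)
arm_or_cohort = pd.DataFrame({
    "ARM_ACCESSION": ["ARM894", "ARM895", "ARM100"],
    "NAME": ["Cohort_1", "Cohort_2", "Other"],
    "STUDY_ACCESSION": ["SDY212", "SDY212", "SDY999"],  # assumed column
})
arm_2_subject = pd.DataFrame({
    "SUBJECT_ACCESSION": ["SUB1", "SUB2", "SUB3"],
    "ARM_ACCESSION": ["ARM894", "ARM100", "ARM895"],
})

# Step 1: find the arms that belong to the study of interest
study_arms = arm_or_cohort.loc[
    arm_or_cohort["STUDY_ACCESSION"] == "SDY212", "ARM_ACCESSION"
]

# Step 2: keep only subject rows assigned to those arms
sdy212_subjects = arm_2_subject[arm_2_subject["ARM_ACCESSION"].isin(study_arms)].copy()
```

Check the column names in your downloaded arm_or_cohort.txt before relying on this lookup.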

Simple Descriptive Statistics¶

At this point we should have built a data frame with the same content as in method 1, so we will look at a few descriptive statistics to confirm.

arm_2_subject_merged.groupby('ARM_NAME').count()['SUBJECT_ACCESSION']

arm_2_subject_merged.groupby('GENDER').count()['SUBJECT_ACCESSION']

Method 3: Download using the ImmPort Query API¶

This method uses the Query API to download a JSON file of HAI results for SDY212. Because the JSON file contains all the columns we need for analysis, creating the data frame for analysis is simpler. The final counts for the descriptive statistics may differ slightly from those of methods 1 and 2, but this is expected because not all the subjects in the 2 arms have HAI results.

# Request a token, then make the API call, then load the result into a pandas DataFrame

token = immport_download.request_immport_token(user_name, password)

r = immport_download.api("https://api.immport.org/data/query/result/hai?studyAccession=SDY212",token)

hai_results = pd.read_json(r)

hai_results.columns
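
The query URLs in this tutorial are written out by hand, including the %20 escape for the space in "Flow cytometry". A minimal sketch of building them from keyword filters with the standard library; build_query_url and BASE_URL are hypothetical names, and the endpoint and filter names are simply the ones that appear in this tutorial's URLs:

```python
from urllib.parse import quote, urlencode

# Common prefix of the query URLs used in this tutorial
BASE_URL = "https://api.immport.org/data/query/result"


def build_query_url(endpoint, **filters):
    """Return BASE_URL/endpoint with the filters encoded as a query string."""
    # quote_via=quote encodes spaces as %20 rather than +
    query = urlencode(filters, quote_via=quote)
    url = f"{BASE_URL}/{endpoint}"
    return f"{url}?{query}" if query else url
```

For example, `build_query_url("hai", studyAccession="SDY212")` produces the URL used in the call above.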

Remove columns and rename to match names in the first 2 methods¶

# Keep only the columns needed; .copy() so later assignments do not touch hai_results
arm_2_subject_merged = hai_results[['subjectAccession','armAccession','minSubjectAge','gender','race']].copy()

# Rename all columns in one call to match the names used in methods 1 and 2
arm_2_subject_merged = arm_2_subject_merged.rename(columns={
    'subjectAccession':'SUBJECT_ACCESSION',
    'armAccession':'ARM_ACCESSION',
    'minSubjectAge':'MIN_SUBJECT_AGE',
    'gender':'GENDER',
    'race':'RACE'})

arm_2_subject_merged.loc[arm_2_subject_merged['ARM_ACCESSION'] == 'ARM894','ARM_NAME'] = "Young"

arm_2_subject_merged.loc[arm_2_subject_merged['ARM_ACCESSION'] == 'ARM895','ARM_NAME'] = "Old"

arm_2_subject_merged.head()

Simple Descriptive Statistics¶

At this point we should have built a data frame with content similar to that of methods 1 and 2, so we will look at a few descriptive statistics to confirm. Because each subject may have multiple HAI results, we need to remove duplicates.

arm_2_subject_merged[['ARM_NAME','SUBJECT_ACCESSION']].drop_duplicates().groupby('ARM_NAME').count()

arm_2_subject_merged[['GENDER','SUBJECT_ACCESSION']].drop_duplicates().groupby('GENDER').count()

Download Files Using Data Query and File Download API¶

In this final section, we have an example of using the Query API to identify flow cytometry FCS files for SDY212 and ARM Cohort_1 using the filePath API method. Then once we have identified the file paths for these files of interest, we will download them using the Download API. We will only download 5 files for this example.

token = immport_download.request_immport_token(user_name, password)

r = immport_download.api("https://api.immport.org/data/query/result/filePath?studyAccession=SDY212&armName=Cohort_1&measurementTechnique=Flow%20cytometry",token)

df = pd.read_json(r)

df = df[df['fileDetail'] == "Flow cytometry result"]

df_fcs = df[['subjectAccession','armAccession','armName','gender','race','minSubjectAge','studyTimeCollected',
             'fileDetail','filePath']]

df_fcs.head()

unique_file_paths = df_fcs.filePath.unique()

unique_fcs_paths = [path for path in unique_file_paths if path.endswith(".fcs")]

# Download at most the first 5 FCS files; slicing is safe if fewer than 5 exist
for fcs_path in unique_fcs_paths[:5]:
    print("Downloading: ", fcs_path)
    immport_download.download_file(user_name, password,
                                   fcs_path, download_directory)

%ls ../output